Reliable Data Orchestration for AI Applications

At their core, AI applications are data-driven.

Because Apache Airflow has become the standard for both data processing and ML pipelines, it is a natural foundation for building AI applications. With Astronomer, companies of all sizes working in the evolving AI landscape get an easy-to-use platform for building advanced data workflows.

In this blog post, we discuss how Dosu, an exciting new company in the AI space, uses Astronomer to manage its data pipelines, and how Dosu helps the Astronomer team maintain Cosmos, an open source project that simplifies how data engineers integrate dbt with Airflow.

Dosu — Everyone’s AI Engineering Teammate

Dosu is an AI teammate that helps engineers develop, maintain, and support software.

Like a human engineer, Dosu uses its understanding of code and code changes to answer questions that aren’t covered by documentation, triage incoming issues, write documentation, and more. Dosu integrates into existing workflows and is available in GitHub, Slack, Jira, and anywhere else product development happens.

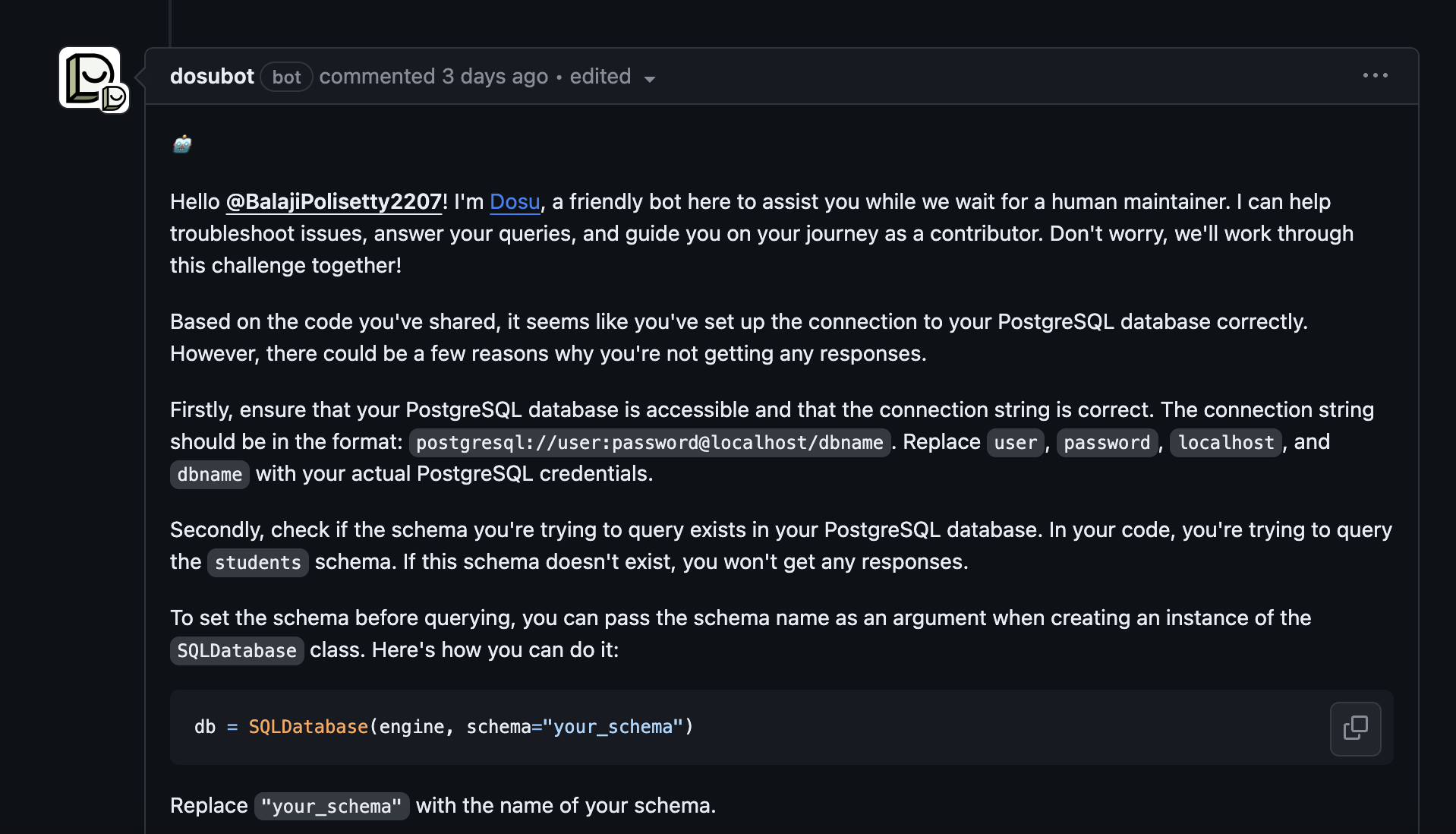

Regular support and maintenance are an essential part of open source software, which makes open source a perfect match for Dosu. Dosu’s GitHub integration helps maintainers label, respond to, and manage GitHub issues, discussions, and PRs. Dosu is active across dozens of open source communities and has already helped unblock thousands of developers, empower new contributors, and save maintainers valuable time.

AI Applications Start with Data

To provide useful and accurate responses, an AI-based platform like Dosu depends on relevant, up-to-date data.

The small engineering team at Dosu manages a staggering number of regularly running data pipelines that ingest and index information. With thousands of developers depending on Dosu, these data pipelines need to be reliable, easy to maintain, and easy to monitor.

Mark Steinbrick, an early engineer at Dosu and a former data engineer at Airbnb (where Airflow was originally created), knew right away that the team should use Airflow. The Dosu team realized that even though AI technology has changed rapidly, the data orchestration requirements of AI applications have not. Airflow was a tried-and-true choice, and Astronomer was the perfect partner. Astronomer made standing up Dosu’s production pipelines a breeze, with no infrastructure for the team to worry about.

Astronomer at Dosu

From one-time backfill jobs when onboarding a new data source to scheduled ingestion and indexing, Dosu uses Astronomer as the backbone of its data operations.

Airflow’s versatility also allows Dosu to have a single tool powering its production AI application and its traditional customer-facing analytics reporting.

The Astronomer platform provides an out-of-the-box unified interface for observability and debugging. This has been a critical feature for the Dosu team as they’ve rapidly iterated on the product and scaled their data operations.

The Dosu team maintains around a dozen critical DAGs that broadly fall into four categories: data ingestion, document indexing, metric generation, and ad hoc data workflows.

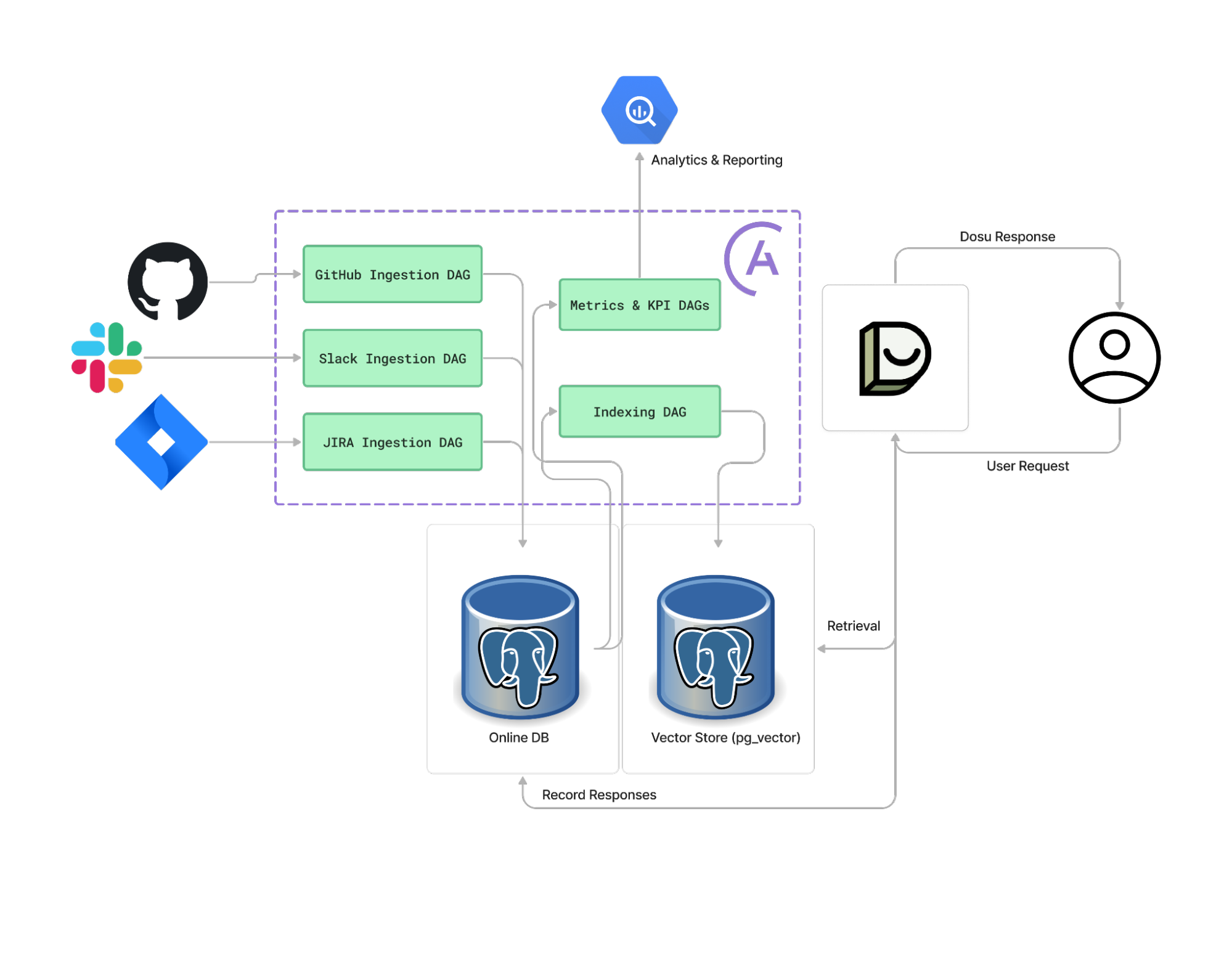

Below is a high-level diagram of how Dosu uses Astronomer to ingest third-party data, index that data, and create metrics from its responses for analytics and reporting.

Each third-party data provider has a dedicated DAG that handles the nuances of that provider’s API, applies transformations to the incoming data, and stores the result in a Postgres database. From there, another DAG indexes all of this information into a separate Postgres instance, which, with the pgvector extension, serves as Dosu’s vector store.
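The post does not show Dosu’s indexing code, but the general shape of an indexing task can be sketched. The example below is a minimal illustration under stated assumptions: it splits each document into fixed-size word chunks and pairs every chunk with an embedding vector, producing rows shaped for a table with a pgvector `vector` column. The `embed` function is a hypothetical toy stand-in (a hashed bag-of-words) for a real embedding model, and the chunk size, vector dimension, and row layout are all assumptions.

```python
import hashlib
import math

# Assumed vector dimension; a real embedding model fixes its own size.
DIM = 64


def embed(text: str) -> list[float]:
    """Hypothetical toy embedding: hash each token into one of DIM buckets,
    count occurrences, then L2-normalize. Stands in for a real model."""
    vec = [0.0] * DIM
    for token in text.lower().split():
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def index_documents(docs: dict[str, str]) -> list[tuple[str, int, str, list[float]]]:
    """Return (doc_id, chunk_no, chunk_text, embedding) rows, ready to be
    inserted into a (hypothetical) table with a pgvector vector(DIM) column."""
    rows = []
    for doc_id, text in docs.items():
        for n, piece in enumerate(chunk(text)):
            rows.append((doc_id, n, piece, embed(piece)))
    return rows
```

In an Airflow deployment, a function like `index_documents` would typically run inside a task, with its rows written to Postgres through a database hook; that wiring is left out here to keep the sketch self-contained.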

When Dosu handles a user query, it retrieves relevant contextual information from the vector store and processes that information through its reasoning engine to produce a response. These responses are written back to the database, where another set of DAGs uses them to generate metrics and KPIs.
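Retrieving context from a pgvector store is a nearest-neighbor search over embeddings. The sketch below is an in-memory stand-in for that step, assuming cosine distance (the metric pgvector’s `<=>` operator computes). The `retrieve` helper, the `(chunk_text, embedding)` row format, and the table and column names in the comment are hypothetical; Dosu’s actual retrieval logic is not described in this post.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity; treats a zero vector as maximally distant."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 1.0
    return 1.0 - dot / (na * nb)


def retrieve(query_vec: list[float], rows: list[tuple[str, list[float]]], k: int = 3) -> list[str]:
    """Return the texts of the k rows closest to query_vec.

    With pgvector, the same ranking would be pushed into SQL, roughly:
      SELECT body FROM chunks ORDER BY embedding <=> %s LIMIT 3;
    (hypothetical table and column names)
    """
    ranked = sorted(rows, key=lambda row: cosine_distance(query_vec, row[1]))
    return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    rows = [("install docs", [1.0, 0.0]), ("release notes", [0.0, 1.0])]
    print(retrieve([0.9, 0.2], rows, k=1))  # prints ['install docs']
```

The retrieved chunks would then be handed to the reasoning engine as context; a production setup would also use an approximate index (pgvector supports HNSW and IVFFlat) rather than scanning every row.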

Each of these DAGs is made up of a complex set of tasks that have changed rapidly as new best practices for generative AI applications have emerged. With Astronomer, Dosu’s engineering team has been able to focus on the business logic of these tasks without taking on the additional burden of scalability and observability.

Dosu for Cosmos

Born out of an Astronomer hackathon, Cosmos is an open source library that enables Airflow developers to integrate dbt projects into their Airflow DAGs, providing visibility, connection management, and easy setup for data orchestration tasks.

“Given that Cosmos came from a hackathon, from day one we weren’t staffed to fully support it. Issues were being raised, PRs were being contributed, and it was tough to keep up. Dosu has been helpful in the triaging process as it allows us to give contributors and community members an instant response.”

— Julian LaNeve, CTO, Astronomer

As Cosmos continued to grow in popularity, the Astronomer team knew that keeping the project well maintained and the community engaged would be crucial to its long-term success. When Dosu reached out, the team at Astronomer had already been thinking about how AI could help strengthen open source communities and lighten the support workload for maintainers. Astronomer CTO Julian LaNeve had already prototyped an AI-powered OSS sidekick and immediately grasped the potential of a product like Dosu. Early results have been very promising: the community loves the organization that auto-labeling brings to issues and PRs, and Dosu has even helped users understand, debug, and ultimately resolve issues without any maintainer involvement.

Conclusion

The Astronomer and Dosu partnership is a natural fit. With Astronomer powering its data operations, Dosu’s product velocity has accelerated. In return, Dosu frees up the Astronomer team’s valuable time to keep improving the platform, which Dosu in turn continues to benefit from.